The scale of the data we are amassing today is a growing but exciting challenge for data-driven organizations. Logs from millions of users, IoT and sensor data, high-volume click-stream information, and more, the data universe is expanding at an unprecedented rate. FYI…in 2020, people created 1.7 MB of data every second. You can now guess how much data we generate on day-to-day basis.

Imagine you have at your fingertips petabytes of data you need to get actionable insights in days not in months. How would you use it— for analyzing sales trends, identifying low-performing assets, improving the experience with personalized offerings, or averting specific threats?

Obviously, it won’t be possible to extract valuable information available in the data cluster manually or even with traditional tools. Doing so will eat up months or perhaps years. Till then, you may lose the competitive edge and/or the threat would have already occurred.

To handle massive data in real-time, you need tools that can process a billion or more records in a second.

Azure Data Explorer

Microsoft Azure Data Explorer (ADX) one such tool that can ingest and store data within its database and perform analysis on petabyte-masses of structured and unstructured data. It is a cloud-based big data analytics platform that helps you handle the many data streams such as log, telemetry, and streaming data generated by disparate systems.

Azure Data Explorer is ideal for analyzing large volumes of diverse data from any data source, such as websites, applications, IoT devices, and more. You can query terabytes of data in just seconds and visualize data with its in-built dashboards, charts, pivots, and more. Microsoft claims that ADX can “query billions of records in seconds.”

Following are the key stages at a high level that occur in ADX while processing the aforementioned high-volume datasets.

Kusto Query Language (KQL)

Kusto query language (KQL) is used to explore and analyze fast-moving data stored in Azure Data Explorer (ADX) coming from various sources such as apps, IoT devices, sensors, and more.

Based on relational database management systems (RDBMS), KQL supports entities such as databases, tables, and columns. It can easily handle structured, semi-structured (for example, JSON-like nested types), and unstructured (free text) data.

How to Perform Kusto Queries

Kusto query language is primary means of interaction. KQL allows you to send data queries, and use control commands to manage entities, discover metadata, and so on. Both queries and control commands are short textual “programs”. Kusto queries can use the SQL language, or the Kusto query language.

As an example, the following query counts how many rows in the Logs table have a value in the Level column equal to the string Critical:

Logs

| where Level == "Critical"

| count

Control commands

Control commands are requests to Kusto to process and potentially modify data or metadata. For example, the following control command creates a new Kusto table with two columns, Level and Text:

.create table Logs (Level:string, Text:string)KQL is ideal for finding patterns/trends, identifying anomalies, building statistical model and more. You can apply it for different scenarios such as:

- Exploring massive set of data

- Performing real time analytics on streaming data

- Analyzing rapidly moving data/time series

Example: Analyzing fast moving COVID-19 dataset

Amongst the above scenarios, suppose you want to analyze fast-moving datasets. The best example is the Covid-19 pandemic, where new data gets added every day.

For example, you want to explore a huge COVID-19 dataset for future scenario planning, answering Covid-related queries, and telling stories with data. Or you want to check Covid active cases in the USA across the years/quarters using COVID-19 (Coronavirus) dataset. Let us run the query for the later example.

Covid19_Bing

| where Location.CountryCode == "US"

| extend stateCode= tostring(Location.StateCode)

| where stateCode != ""

| extend yr= datetime_part("Year", ReportDate), qtr= datetime_part("Quarter", ReportDate)

| extend yrQtr = strcat(yr, "Q", qtr)

| extend activeCases = toint(Active.Value)

| summarize activeCasesCount = sum(activeCases) by stateCode, yrQtr

| where activeCasesCount > 10000

| top 10 by activeCasesCount

| render columnchart with (ytitle="Active Cases Count By Yr/QTR", xtitle="States", legend=hidden)

* The data is not current and used for demonstration purposes only.

Beyond the above scenario, you can apply KQL for apps insights, log analytics, threat analysis, and security center. The possibilities are unlimited.

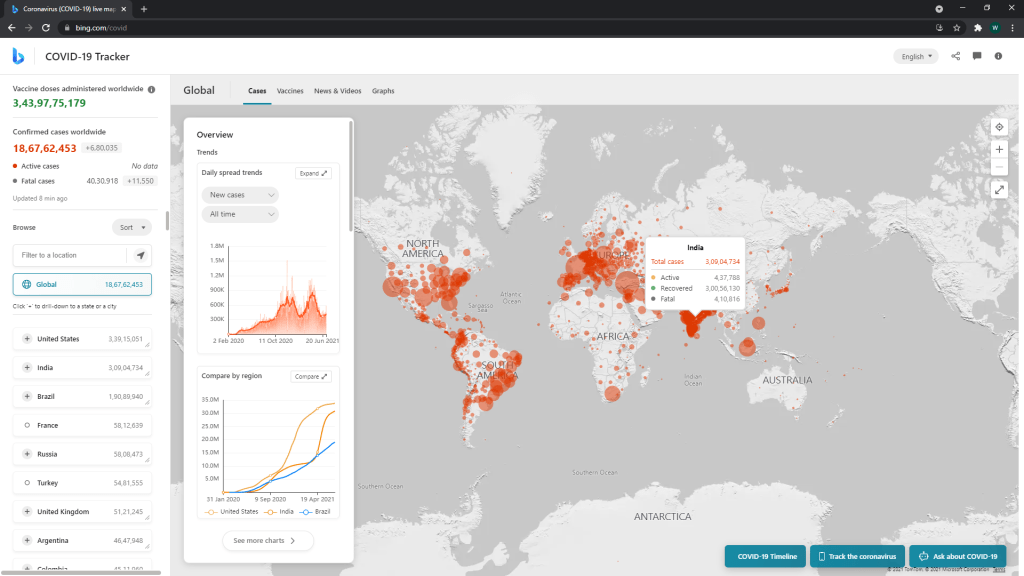

Data analysts and business analysts can simplify the path from data to insights for desired business outcomes. Even they can connect Azure Data Explorer to Power BI to visualize data and drive actions. Following is a screenshot of a similar implementation available here:

As one can imagine, the KQL landscape is moving fast and evolving quickly. Microsoft has taken an ‘inclusive’ approach like other technologies and extended its capabilities to other languages as well. Amongst other things, one good example could be releasing a Python library targeted at data scientists.

This makes KQL an excellent tool for multiple use cases. One can use them for solving complex data science problems as well as for self-service BI. Imagination is the only limit.